Atom


From Wikipedia, the free encyclopedia

| Atom | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

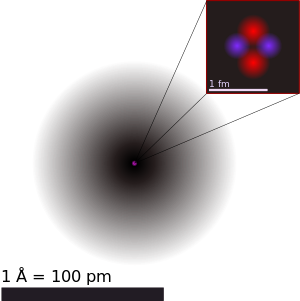

| This illustrates the nucleus (pink) and the electron cloud distribution (black) of the HeliumÅngström, or 10-10 m. atom. The nucleus (upper right) is in reality spherically symmetric, although this is not always the case for more complicated nuclei. For size comparison, the black bar is 1 | ||||||||||

| Classification | ||||||||||

| ||||||||||

| Properties | ||||||||||

|

In chemistry and physics, an atom (Greek ἄτομος or átomos, meaning "the smallest indivisible particle of matter, i.e. something that cannot be divided") is the smallest particle characterizing a chemical element. An atom consists of a dense nucleus of positively charged protons and electrically neutral neutrons, surrounded by a much larger electron cloud consisting of negatively charged electrons. When the number of protons matches the number of electrons, an atom is electrically neutral; otherwise it is an ion and has a net charge. The number of protons in an atom defines the chemical element to which it belongs, while the number of neutrons determines the isotope of the element.

The concept of the atom as an indivisible component of matter was proposed by early Indian and Greek philosophers. Early chemists, such as Robert Boyle, Antoine Lavoisier and John Dalton, provided a physical basis for this idea by showing that certain substances could not be further broken down by chemical methods. In 1897, J. J. Thomson discovered that the atom contained charged particles called electrons. About a decade later, Ernest Rutherford showed that most of an atom's mass and its positive charge were concentrated at its core. The emergent field of quantum mechanics allowed Niels Bohr, Erwin Schrödinger[2][3] and others to produce an accurate mathematical model of the structure and properties of the atom.

Relative to everyday experience, atoms are minuscule objects with proportionately tiny masses that can only be observed individually using special instruments such as the scanning tunneling microscope. As an example, a single carat diamond with a mass of 0.2 g contains about 10 sextillion (10²²) atoms of carbon.[4] More than 99.9% of an atom's mass is concentrated in the nucleus,[5] with protons and neutrons having about equal mass. Depending on the number of protons and neutrons, the nucleus may be unstable and subject to radioactive decay.[6] The electrons surrounding the nucleus occupy a set of stable energy levels, or orbitals, and they can transition between these states by the absorption or emission of photons that match the energy differences between the levels. The electrons determine the chemical properties of an element, and strongly influence an atom's magnetic moment.

History

The concept that matter is composed of discrete units and cannot be divided into arbitrarily small quantities has been around for thousands of years, but these ideas were founded in abstract, philosophical reasoning rather than experimentation and empirical observation. The nature of atoms in philosophy varied considerably over time and between cultures and schools, and often had spiritual elements. Nevertheless, the basic idea of the atom was adopted by scientists thousands of years later because it elegantly explained new discoveries in the field of chemistry.[7]

The earliest references to the concept of atoms date back to ancient India in the 6th century BCE.[8] The Nyaya and Vaisheshika schools developed elaborate theories of how atoms combined into more complex objects (first in pairs, then trios of pairs).[9] References to atoms in the West emerged a century later with Leucippus, whose student, Democritus, systematized his views. Around 450 BCE, Democritus coined the term atomos, which meant "uncuttable". Although both the Indian and Greek concepts of the atom were based purely on philosophy, modern science has retained the name coined by Democritus.[7]

In 1661, Robert Boyle published The Sceptical Chymist, in which he argued that matter was composed of various combinations of different "corpuscules" or atoms, rather than the classical elements of air, earth, fire and water.[10] In 1789, Antoine Lavoisier defined the term element to mean a basic substance that could not be further broken down by the methods of chemistry.[11]

In 1803, John Dalton used the concept of atoms to explain why elements always reacted in ratios of small whole numbers, and why certain gases dissolved better in water than others. He proposed that each element consists of atoms of a single, unique type, and that these atoms could join to each other to form chemical compounds.[12][13]

In 1827, the British botanist Robert Brown used a microscope to look at dust grains floating in water and discovered that they moved about erratically, a phenomenon that became known as "Brownian motion". J. Desaulx suggested in 1877 that the phenomenon was caused by the thermal motion of water molecules, and in 1905 Albert Einstein produced the first mathematical analysis of the motion, thus confirming the hypothesis.[14][15]

In 1897, J. J. Thomson, through his work on cathode rays, discovered the electron and showed that it was subatomic, which overturned the concept of atoms as indivisible units. Later, Thomson created a technique for separating different types of atoms through his work on ionized gases, which subsequently led to the discovery of isotopes.[16]

Thomson believed that the electrons were distributed evenly throughout the atom, balanced by the presence of a uniform sea of positive charge. However, the results of the 1909 gold foil experiment were interpreted by Ernest Rutherford as suggesting that the positive charge of an atom and most of its mass were concentrated in a nucleus at the center of the atom (the Rutherford model), with the electrons orbiting it like planets around a sun. In 1913, Niels Bohr added quantum ideas[17] to this model, in which electrons were confined to clearly defined orbits and could jump between them, but could not freely spiral inward or outward through intermediate states.

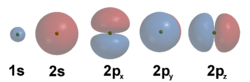

In 1926, Erwin Schrödinger, using Louis de Broglie's 1924 proposal that particles behave to an extent like waves, developed a mathematical model of the atom that described the electrons as three-dimensional waveforms rather than point particles. A consequence of using waveforms to describe electrons is that it is mathematically impossible to obtain precise values for both the position and momentum of a particle; this became known as the uncertainty principle. Under this principle, a measurement of position yields only a range of probable values for momentum, and vice versa. Although this model was difficult to visualize, it was able to explain observations of atomic behavior that previous models could not, such as certain structural and spectral patterns of atoms larger than hydrogen. Thus, the planetary model of the atom was discarded in favor of one that described orbital zones around the nucleus where a given electron is most likely to be found.[18][19]

In 1913, Frederick Soddy discovered that there appeared to be more than one element at each position on the periodic table. The term isotope was coined by Margaret Todd as a suitable name for these elements. The development of the mass spectrometer allowed the exact mass of atoms to be measured. Francis William Aston used this technique to demonstrate that elements had isotopes of different mass. The masses of these isotopes differed by integer amounts, a pattern called the whole number rule.[20] The explanation for these different isotopes awaited the discovery of the neutron, an electrically neutral particle with a mass similar to the proton, by James Chadwick in 1932. Isotopes were then explained as atoms with the same number of protons, but different numbers of neutrons within the nucleus.[21]

Around 1985, Steven Chu and co-workers at Bell Labs developed a technique for lowering the temperatures of atoms using lasers. In the same year, a team led by William D. Phillips managed to contain atoms of sodium in a magnetic trap. The combination of these two techniques and a method based on the Doppler effect, developed by Claude Cohen-Tannoudji and his group, allows small numbers of atoms to be cooled to very low temperatures. This makes it possible to study the atoms with great precision, and later led to the experimental realization of Bose–Einstein condensation.[22]

Components

Subatomic particles

Though the word atom originally denoted a particle that cannot be cut into smaller particles, in modern scientific usage the 'atom' is composed of various subatomic particles. The component particles of an atom consist of the electron, the proton and, for atoms other than hydrogen-1, the neutron.

The electron is by far the least massive of these particles at 9.11×10⁻³¹ kg, with a negative electrical charge and a size that is too small to be measured using available techniques.[23] Protons have a positive charge and a mass 1,836 times that of the electron, at 1.6726×10⁻²⁷ kg, although atomic binding energy changes can reduce this. Neutrons have no electrical charge and have a free mass of 1,839 times the mass of the electron,[24] or 1.6749×10⁻²⁷ kg. Neutrons and protons have comparable dimensions, on the order of 2.5×10⁻¹⁵ m, although the 'surface' of these particles is not very sharply defined.[25]
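The mass ratios quoted above can be checked directly from the kilogram values. This is a minimal Python sketch: the constant and function names are illustrative, the neutron mass is taken as the standard 1.6749×10⁻²⁷ kg, and the hydrogen-atom estimate ignores binding energy. It also shows why more than 99.9% of an atom's mass sits in the nucleus.

```python
# Rest masses in kilograms. Electron and proton values are quoted in the text;
# the neutron value is the standard 1.6749e-27 kg.
ELECTRON_KG = 9.11e-31
PROTON_KG = 1.6726e-27
NEUTRON_KG = 1.6749e-27


def mass_ratio(particle_kg: float, reference_kg: float = ELECTRON_KG) -> float:
    """Return how many times heavier a particle is than the reference particle."""
    return particle_kg / reference_kg


proton_ratio = mass_ratio(PROTON_KG)    # roughly 1836
neutron_ratio = mass_ratio(NEUTRON_KG)  # roughly 1839

# Share of a hydrogen-1 atom's mass carried by its nucleus (a single proton),
# ignoring the tiny binding-energy correction.
nucleus_fraction = PROTON_KG / (PROTON_KG + ELECTRON_KG)

print(f"proton/electron mass ratio:  {proton_ratio:.0f}")
print(f"neutron/electron mass ratio: {neutron_ratio:.0f}")
print(f"hydrogen-1 mass in the nucleus: {nucleus_fraction:.3%}")
```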

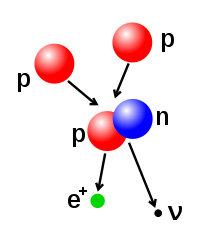

In the Standard Model of physics, both protons and neutrons are composed of elementary particles called quarks. The quark is a type of fermion, one of the two basic constituents of matter—the other being the lepton, of which the electron is an example. There are six different types of quarks, and each has a fractional electric charge of either +2/3 or −1/3. Protons are composed of two up quarks and one down quark, while a neutron consists of one up quark and two down quarks. This distinction accounts for the difference in mass and charge between the two particles. The quarks are held together by the strong nuclear force, which is mediated by gluons. The gluon is a member of the family of bosons, which are elementary particles that mediate physical forces.[26][27]
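Because electric charge simply adds, the proton and neutron charges follow from the quark content described above. A small sketch using Python's `fractions` module to keep the thirds exact; the dictionary and function names are hypothetical, and only the up and down quarks named in the text are included.

```python
from fractions import Fraction

# Electric charges of the up and down quarks, in units of the elementary charge e.
QUARK_CHARGE = {"up": Fraction(2, 3), "down": Fraction(-1, 3)}


def hadron_charge(quarks: list[str]) -> Fraction:
    """Sum the fractional charges of the constituent quarks."""
    return sum((QUARK_CHARGE[q] for q in quarks), Fraction(0))


proton = ["up", "up", "down"]
neutron = ["up", "down", "down"]

print("proton charge:", hadron_charge(proton))
print("neutron charge:", hadron_charge(neutron))
```

Using `Fraction` rather than floats avoids rounding residue such as `0.9999999999999999` when thirds are summed.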

Nucleus

All of the bound protons and neutrons in an atom make up a dense, massive atomic nucleus, and are collectively called nucleons. Although the positive charge of protons causes them to repel each other, they are bound together with the neutrons by a short-ranged attractive potential called the residual strong force. At distances smaller than 2.5 fm, the residual strong force is stronger than the Coulomb force, so it is able to overcome the mutual repulsion between the protons in the nucleus. The radius of a nucleus is approximately $r \approx 1.2\,\sqrt[3]{A}$ fm, where $A$ is the total number of nucleons. This is much smaller than the radius of the atom, which is on the order of 10⁵ fm.[28]

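The approximate radius formula can be evaluated for a few nuclei to show how small the nucleus is relative to the whole atom. A sketch, assuming the 1.2 fm prefactor from the text and treating 10⁵ fm as a representative atomic radius; the function name is hypothetical, and the formula is only an empirical approximation.

```python
def nuclear_radius_fm(mass_number: int) -> float:
    """Approximate nuclear radius r = 1.2 * A**(1/3), in femtometres."""
    if mass_number < 1:
        raise ValueError("mass number must be a positive integer")
    return 1.2 * mass_number ** (1 / 3)


ATOM_RADIUS_FM = 1e5  # order-of-magnitude atomic radius quoted in the text

for name, a in [("carbon-12", 12), ("iron-56", 56), ("uranium-238", 238)]:
    r = nuclear_radius_fm(a)
    print(f"{name}: r = {r:.1f} fm, roughly 1/{ATOM_RADIUS_FM / r:,.0f} of the atom's radius")
```

Because the radius grows only as the cube root of A, even the heaviest nuclei stay within a few femtometres.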

Atoms of the same element have the same number of protons, called the atomic number. Within a single element, the number of neutrons may vary, determining the isotope of that element. The number of neutrons relative to the protons determines the stability of the nucleus, with certain isotopes undergoing radioactive decay.[29]

The Pauli exclusion principle is a quantum mechanical effect that prohibits identical fermions[30] (such as multiple protons) from occupying the same quantum state at the same time. Thus every proton in the nucleus must occupy a different quantum state, and the same rule applies to all of the neutrons. To minimize the energy of the nucleus, the lowest allowed energy levels are preferentially occupied by the nucleons. Hence a nucleus with a different number of protons than neutrons can potentially drop to a lower energy state through a radioactive decay that brings the numbers of protons and neutrons closer together. With increasing atomic number, the mutual repulsion of the protons requires an increasing proportion of neutrons to maintain the stability of the nucleus, which modifies this trend toward equal numbers of protons and neutrons.

The number of protons and neutrons in the atomic nucleus can be modified, although this can require very high energies because of the strong force. Nuclear fusion occurs when nuclei or individual nucleons collide and merge into a heavier nucleus. Nuclear fission is the opposite process, in which a nucleus splits into smaller nuclei and emits nucleons. The nucleus can also be modified through bombardment by high energy subatomic particles or photons. In any such process that changes the number of protons in a nucleus, the atom becomes an atom of a different chemical element.[31][32]

The fusion of two nuclei that have lower atomic numbers than iron and nickel is an exothermic process that releases more energy than is required to bring them together. It is this energy-releasing process that makes nuclear fusion in stars a self-sustaining reaction. Because energy is released, the mass of the fused nucleus is lower than the combined mass of the individual nuclei. The energy released (E) is described by Albert Einstein's mass–energy equivalence formula, E = mc², where m is the mass loss and c is the speed of light.[33]
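To make E = mc² concrete, the sketch below applies it to one fusion reaction, deuterium plus tritium giving helium-4 plus a neutron. The reaction is chosen only as an example, and the atomic masses and constants are standard reference values supplied here rather than taken from the text; the names are hypothetical.

```python
# Atomic masses in unified atomic mass units (u); standard reference values.
# Neutral-atom masses are used: both sides carry two electrons, which cancel.
MASS_U = {
    "deuterium": 2.014101778,
    "tritium": 3.016049281,
    "helium-4": 4.002603254,
    "neutron": 1.008664916,
}
U_TO_KG = 1.66053907e-27    # kilograms per u
C = 299_792_458.0           # speed of light, m/s
J_PER_MEV = 1.602176634e-13


def released_energy_j(reactants: list[str], products: list[str]) -> float:
    """E = m * c**2, where m is the mass lost in the reaction (positive = energy released)."""
    mass_loss_u = sum(MASS_U[p] for p in reactants) - sum(MASS_U[p] for p in products)
    return mass_loss_u * U_TO_KG * C**2


e = released_energy_j(["deuterium", "tritium"], ["helium-4", "neutron"])
print(f"D + T -> He-4 + n releases {e:.2e} J, about {e / J_PER_MEV:.1f} MeV")
```

The mass lost is under 0.4% of the reactant mass, yet it corresponds to roughly 17.6 MeV per reaction.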

The mass of the nucleus is less than the sum of the masses of the separate particles. The difference between these two values, expressed as energy through E = mc², is the binding energy of the nucleus: the energy that is emitted when the individual particles come together to form the nucleus.[28] The binding energy per nucleon increases with increasing atomic number until iron or nickel is reached.[34] For heavier nuclei, the binding energy per nucleon begins to decrease. This means that fusion of nuclei with higher atomic numbers is an endothermic process. (These more massive nuclei cannot undergo an energy-producing fusion reaction that can sustain the hydrostatic equilibrium of a star.) In atoms with very high or very low ratios of protons to neutrons, the binding energy becomes negative, resulting in an unstable nucleus.[30]
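The binding energy can be computed from the mass difference in the same way. This sketch compares helium-4 with iron-56; the atomic masses and the 931.494 MeV per u conversion are standard reference values supplied for illustration, and neutral hydrogen-1 atoms stand in for bare protons so that the electron masses cancel.

```python
# Standard reference masses in u. Neutral hydrogen-1 atoms stand in for bare protons,
# so their electrons cancel against those of the neutral atom being analysed.
HYDROGEN_1_U = 1.007825032
NEUTRON_U = 1.008664916
MEV_PER_U = 931.494  # energy equivalent of one atomic mass unit, MeV


def binding_energy_mev(z: int, n: int, atomic_mass_u: float) -> float:
    """(mass of separate constituents - mass of the atom) converted to MeV."""
    mass_defect_u = z * HYDROGEN_1_U + n * NEUTRON_U - atomic_mass_u
    return mass_defect_u * MEV_PER_U


be_he = binding_energy_mev(z=2, n=2, atomic_mass_u=4.002603254)  # helium-4
be_fe = binding_energy_mev(z=26, n=30, atomic_mass_u=55.934936)  # iron-56

print(f"helium-4: {be_he:.1f} MeV, {be_he / 4:.2f} MeV per nucleon")
print(f"iron-56:  {be_fe:.1f} MeV, {be_fe / 56:.2f} MeV per nucleon")
```

Helium-4 comes out near 7.1 MeV per nucleon and iron-56 near 8.8 MeV per nucleon, consistent with the rise in binding energy per nucleon toward iron.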

Electron cloud

The electrons in an atom are bound to the protons in the nucleus by the electromagnetic force. Like other particles, electrons have properties of both a particle and a wave. The electron cloud is a region inside the electrostatic potential well that surrounds the much smaller nucleus, in which each electron forms a type of three-dimensional standing wave. This standing wave condition is characterized by an atomic orbital, a mathematical function that defines the probability that an electron will appear at a particular location when its position is measured. Only a discrete (or quantized) set of these orbitals exists around the nucleus, as other possible wave patterns produce interference effects that would destroy the standing wave.[35]

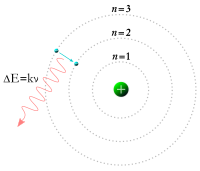

Each atomic orbital corresponds to a particular energy level of the electron. The electron can change its state to a higher energy level by absorbing a photon with sufficient energy to boost it into the new quantum state. Likewise, through spontaneous emission, an electron in a higher energy state can drop to a lower energy state while radiating the excess energy as a photon. These characteristic energy values, defined by the differences in the energies of the quantum states, are responsible for atomic spectral lines.[35]

The number of electrons associated with an atom is changed far more readily than the number of nucleons, because the energy binding the electrons, as measured by the ionization potential, is much lower than the binding energy of the nucleus. Atoms are electrically neutral if they have an equal number of protons and electrons. Atoms that have either a deficit or a surplus of electrons are called ions. Electrons that are farthest from the nucleus may be transferred to other nearby atoms or shared between atoms. By this mechanism atoms are able to bond into molecules and other types of chemical compounds, such as ionic and covalent network crystals.[36]

Properties

An element consists of atoms that have the same number of protons in their nuclei. Each element can have multiple isotopes, nuclei with specific numbers of protons and neutrons. Even hydrogen, the simplest of elements, has the isotopes deuterium and tritium.[37] The known elements form a continuous range of atomic numbers from hydrogen up to element 118, ununoctium.[38] All known isotopes of elements with atomic numbers greater than 82 are radioactive.[39][40]

Mass

Because the large majority of an atom's mass comes from the protons and neutrons, the total number of these particles in an atom is called the mass number. The mass of an atom at rest is often expressed using the unified atomic mass unit (u), which is also called the dalton (Da). This unit is defined as a twelfth of the mass of a free atom of carbon-12, which is approximately 1.66×10⁻²⁷ kg.[41] Hydrogen-1, the atom with the lowest mass, has an atomic mass of 1.007825 u.[42] An atom has a mass approximately equal to its mass number times the atomic mass unit.[43]

The mole is defined so that one mole of an element with atomic mass 1 u has a mass of 1 gram. A mole always contains the same number of atoms (or molecules), about 6.022×10²³, which is known as the Avogadro constant.[41]
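The mass-number rule and the Avogadro constant together reproduce the introduction's estimate that a 0.2 g, one-carat diamond holds about 10²² carbon atoms. A minimal sketch: the function names are hypothetical, carbon's molar mass is rounded to 12 g/mol, and the constants are the standard values of the Avogadro constant and the atomic mass unit.

```python
AVOGADRO = 6.02214076e23   # entities per mole
U_TO_KG = 1.66053907e-27   # one unified atomic mass unit (dalton) in kg


def approx_atom_mass_kg(mass_number: int) -> float:
    """Rough atomic mass: mass number times the atomic mass unit."""
    return mass_number * U_TO_KG


def atoms_in_sample(sample_grams: float, molar_mass_g_per_mol: float) -> float:
    """Number of atoms = (sample mass / molar mass) * Avogadro constant."""
    return sample_grams / molar_mass_g_per_mol * AVOGADRO


print(f"carbon-12 atom: about {approx_atom_mass_kg(12):.3e} kg")
# One-carat diamond: 0.2 g of carbon with a molar mass of roughly 12 g/mol.
print(f"atoms in 0.2 g of carbon: about {atoms_in_sample(0.2, 12.0):.1e}")
```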

Size

Atoms lack a well-defined outer boundary, so their dimensions are usually described in terms of the distance between two nuclei when the atoms are bonded. The radius varies with the location of an atom on the periodic table,[44] its chemical bond type, its coordination number (the total number of neighbors of a central atom in a chemical compound) and its spin state.[45] The smallest atom is helium, with a radius of 31 pm, while the largest known is caesium at 298 pm. Although hydrogen has a lower atomic number than helium, the calculated radius of the hydrogen atom is about 70% larger. Atomic dimensions are much smaller than the wavelengths of visible light (400–700 nm), so atoms cannot be viewed using an optical microscope. However, individual atoms can be observed using a scanning tunneling microscope.
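The two size comparisons above reduce to simple ratios. A short sketch: the helium and caesium radii come from the text, while the 53 pm calculated radius for hydrogen is a supplied value, not stated in the source; the variable names are illustrative.

```python
# Calculated atomic radii in picometres. Helium and caesium are quoted in the text;
# the hydrogen value (53 pm) is a supplied assumption from the same type of calculation.
RADIUS_PM = {"hydrogen": 53, "helium": 31, "caesium": 298}
SHORTEST_VISIBLE_NM = 400

# How much larger the hydrogen radius is than the helium radius.
excess = RADIUS_PM["hydrogen"] / RADIUS_PM["helium"] - 1

# How many caesium atoms (diameter = 2 * radius) fit across one 400 nm wavelength.
diameters_per_wavelength = SHORTEST_VISIBLE_NM * 1000 / (2 * RADIUS_PM["caesium"])

print(f"hydrogen radius exceeds helium's by {excess:.0%}")
print(f"about {diameters_per_wavelength:.0f} caesium atoms span 400 nm")
```

Even the largest atom is several hundred times smaller than the shortest visible wavelength, which is why optical microscopes cannot resolve individual atoms.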

Various analogies have been used to demonstrate the minuteness of the atom. A typical human hair is about 1 million carbon atoms in width.[46] A single drop of water contains about 2 sextillion (2×1021) atoms of oxygen, and twice the number of hydrogen atoms.[47] If an apple was magnified to the size of the Earth, then the atoms in the apple would be approximately the size of the original apple.[48]

Radioactive decay

Every element has one or more isotopes that have unstable nuclei that are subject to radioactive decay, causing the nucleus to emit particles or electromagnetic radiation. Radioactivity can occur when the radius of a nucleus is large compared to the range of the strong force, which only acts over distances on the order of 1 fm.[49]

There are three major forms of radioactive decay:[50]

- Alpha decay occurs when the nucleus emits two protons and two neutrons, forming a helium nucleus, or alpha particle. The result of the emission is a new element with an atomic number lower by two.

- Beta decay is governed by the weak force, and results from the transformation of a neutron into a proton, or a proton into a neutron. The first is accompanied by the emission of an electron and an antineutrino, while the second causes the emission of a positron and a neutrino. The electron or positron emissions are called beta particles. Beta decay changes the atomic number of the nucleus by one.

- Gamma decay results from a change in the energy level of the nucleus to a lower state, resulting in the emission of electromagnetic radiation. This can occur following the emission of an alpha or a beta particle from radioactive decay.
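The effect of each decay mode listed above on the atomic number Z and mass number A can be written as a small lookup. A sketch with hypothetical names: it tracks only Z and A, splits beta decay into its neutron-to-proton ("beta-minus") and proton-to-neutron ("beta-plus") branches, and uses uranium-238 and carbon-14 as standard examples supplied for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nuclide:
    z: int  # atomic number (protons)
    a: int  # mass number (protons + neutrons)


def decay(n: Nuclide, mode: str) -> Nuclide:
    """Return the daughter nuclide for the three major decay modes."""
    if mode == "alpha":       # emits 2 protons + 2 neutrons (a helium-4 nucleus)
        return Nuclide(n.z - 2, n.a - 4)
    if mode == "beta-minus":  # neutron -> proton, emits an electron and an antineutrino
        return Nuclide(n.z + 1, n.a)
    if mode == "beta-plus":   # proton -> neutron, emits a positron and a neutrino
        return Nuclide(n.z - 1, n.a)
    if mode == "gamma":       # only the nuclear energy level changes
        return n
    raise ValueError(f"unknown decay mode: {mode}")


print(decay(Nuclide(92, 238), "alpha"))     # uranium-238 decays to thorium-234
print(decay(Nuclide(6, 14), "beta-minus"))  # carbon-14 decays to nitrogen-14
```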

There are a number of less common forms of radioactive decay which result in emission of some of these particles by other mechanisms, or different particles.[51]

Each radioactive isotope has a characteristic decay time period, the half-life, defined as the amount of time needed for half of a sample to decay. This is an exponential decay process that reduces the remaining quantity of the isotope by 50% every half-life.[49]
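The halving rule gives the remaining fraction as $(1/2)^{t/T_{1/2}}$. A minimal sketch; the function name is hypothetical, and the 5,730-year half-life of carbon-14 is a commonly quoted value supplied for illustration rather than taken from the text.

```python
def remaining_fraction(elapsed: float, half_life: float) -> float:
    """Fraction of the original isotope left: (1/2) ** (elapsed / half_life)."""
    if half_life <= 0:
        raise ValueError("half-life must be positive")
    return 0.5 ** (elapsed / half_life)


CARBON_14_HALF_LIFE_YEARS = 5730  # commonly quoted value, supplied for illustration

for years in (1000, 5730, 11460, 17190):
    frac = remaining_fraction(years, CARBON_14_HALF_LIFE_YEARS)
    print(f"after {years:>6} years: {frac:.3f} of the carbon-14 remains")
```

Each additional half-life halves what is left (0.5, 0.25, 0.125, ...), so the sample never decays completely in a finite number of half-lives.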

Magnetic moment

Elementary particles possess a quantum mechanical property known as spin. Spin is a form of angular momentum and therefore has a direction, although the particles themselves cannot be said to be rotating. Electrons in particular are "spin-½" particles, as are protons and neutrons. The spin of an atom is determined by the spins of its constituent components, and how the spin is distributed and arranged among the subatomic components.[52]

The spin of an atom determines its magnetic moment, and consequently the magnetic properties of each element. Bound electrons pair up with each other, with one member of each pair in a spin up state and the other in the opposite, spin down state. Thus these spins tend to cancel each other out, reducing the total magnetic dipole moment to zero. In ferromagnetic elements such as iron, the orbitals of neighboring atoms overlap and a lower energy state is achieved when the magnetic moments are aligned with each other. When the magnetic moments of ferromagnetic atoms are lined up, the material can produce a measurable macroscopic field. Paramagnetic materials have atoms with magnetic moments that point in random directions when no magnetic field is present, but the magnetic moments of the individual atoms line up in the presence of a field.[53][54]

The nucleus of an atom can have a net spin. Normally these nuclei are aligned in random directions because of thermal equilibrium. However, for certain elements (such as xenon-129) it is possible to polarize a significant proportion of the nuclear spin states so that they are aligned in the same direction—a condition called hyperpolarization. This has important applications in magnetic resonance imaging.[55][56]

Energy levels

When an electron is bound to an atom, it has a potential energy that is inversely proportional to its distance from the nucleus. The strength of this binding is measured by the amount of energy needed to free the electron from the atom, usually given in units of electron volts (eV). In the quantum mechanical model, a bound electron can only occupy a set of states centered on the nucleus, and each state corresponds to a specific energy level. The lowest energy state of a bound electron is called the ground state, while an electron at a higher energy level is in an excited state.[57]

In order for an electron to transition between two different states, it must absorb or emit a photon whose energy matches the energy difference between those levels. The energy of an emitted photon is proportional to its frequency, so these specific energy levels appear as distinct bands in the electromagnetic spectrum.[58] Each element has a characteristic spectrum that can depend on the nuclear charge, the subshells filled by electrons, the electromagnetic interactions between the electrons, and other factors.[59]
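For hydrogen, the simplest case, the level energies and the wavelength of an emitted photon can be estimated with the Bohr-model formula $E_n = -13.6\ \text{eV}/n^2$ together with $\lambda = hc/\Delta E$. The Bohr formula is not given in the text; it is the standard textbook approximation, supplied here, valid only for hydrogen and ignoring fine structure and the finite nuclear mass. Function names are hypothetical.

```python
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy, eV (Bohr model)
HC_EV_NM = 1239.84198   # Planck constant times speed of light, eV*nm


def level_energy_ev(n: int) -> float:
    """Bohr-model energy of hydrogen level n; negative values mean the electron is bound."""
    if n < 1:
        raise ValueError("principal quantum number n must be >= 1")
    return -RYDBERG_EV / n**2


def photon_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon emitted when the electron drops from n_upper to n_lower."""
    if n_upper <= n_lower:
        raise ValueError("emission requires n_upper > n_lower")
    delta_e = level_energy_ev(n_upper) - level_energy_ev(n_lower)  # photon energy, eV
    return HC_EV_NM / delta_e


print(f"ground state (n=1): {level_energy_ev(1):.2f} eV")
print(f"n=3 -> n=2 photon: {photon_wavelength_nm(3, 2):.0f} nm (red)")
```

The n=3 to n=2 transition lands near 656 nm, the red line that dominates the visible hydrogen spectrum.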

When light with a continuous spectrum passes through a gas or plasma, some of the photons are absorbed by atoms, causing electrons to change their energy level. These excited electrons then spontaneously emit this energy as a photon traveling in a random direction, and so drop back to lower energy levels. Thus the atoms behave like a filter that forms a series of dark absorption bands, or spectral lines, in the energy output. Spectroscopic measurements of the strength and width of the various spectral lines allow the composition and physical properties of a substance to be determined.[60]

If a bound electron is in an excited state, an interacting photon with the proper energy can cause stimulated emission of a photon with matching energy. For this to occur, the electron must drop to a lower energy state whose energy difference matches the energy of the interacting photon. The emitted photon and the interacting photon then move off in parallel and with matching phases; that is, the wave patterns of the two photons are synchronized. This physical property is used to make lasers, which can emit a coherent beam of light energy in a narrow frequency band.[61]

When an atom is in an external magnetic field, spectral lines become split into three or more components, a phenomenon called the Zeeman effect. This is caused by the interaction of the magnetic field with the magnetic moment of the atom and its electrons.[62] An external electric field can also cause splitting and shifting of spectral lines by modifying the electron energy levels, a phenomenon called the Stark effect.[63]

Valence

The outermost electron shell of an atom in its uncombined state is known as the valence shell, and the electrons in that shell are called valence electrons. The number of valence electrons determines the bonding behavior with other atoms. Atoms tend to chemically react with each other in a manner that will fill their outer valence shells.[64]

The chemical elements are often displayed in a periodic table that is laid out to display recurring chemical properties, and elements with the same number of valence electrons form a group that is aligned in the same column of the table. (The horizontal rows correspond to the filling of a quantum shell of electrons.) The elements at the far right of the table have their outer shell completely filled with electrons, which results in chemically very inert elements known as the noble gases.[65][66]

Identification

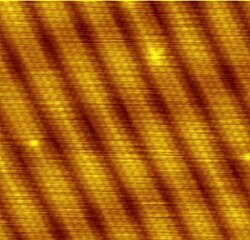

The scanning tunneling microscope is a device for viewing surfaces at the atomic level. It uses the quantum tunneling phenomenon, which allows particles to pass through a barrier that would normally be insurmountable. Electrons tunnel through the vacuum between two planar metal electrodes, on each of which is an adsorbed atom, providing a tunneling-current density that can be measured. Scanning one atom (taken as the tip) past the other (the sample) permits plotting of tip displacement versus lateral separation for a constant current. Calculations show the extent to which scanning-tunneling-microscope images of an individual atom are visible, and confirm that for low bias, the microscope images the space-averaged dimensions of the electron orbitals across closely packed energy levels, namely the local density of states at the Fermi level.[67][68]
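Part of what makes tunneling useful for imaging is how steeply the current falls with distance. A rough sketch under stated assumptions not taken from the text: a one-dimensional rectangular barrier, so that the current scales as $I \propto e^{-2\kappa d}$ with $\kappa = \sqrt{2 m \phi}/\hbar$, and a barrier height $\phi$ of 4 eV, a typical metal work function. Names are hypothetical.

```python
import math

HBAR = 1.054571817e-34         # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837e-31  # kg
EV = 1.602176634e-19           # joules per electronvolt


def decay_constant_per_m(barrier_ev: float) -> float:
    """kappa = sqrt(2 * m * phi) / hbar for an electron under a barrier of height phi."""
    return math.sqrt(2 * ELECTRON_MASS * barrier_ev * EV) / HBAR


def current_ratio(gap_increase_m: float, barrier_ev: float = 4.0) -> float:
    """Factor by which a current I ~ exp(-2 * kappa * d) falls when the gap d grows."""
    return math.exp(2 * decay_constant_per_m(barrier_ev) * gap_increase_m)


print(f"kappa = {decay_constant_per_m(4.0) * 1e-10:.2f} per angstrom")
print(f"current drops about {current_ratio(1e-10):.1f}x per extra angstrom")
```

Under these assumptions the current changes by nearly an order of magnitude per ångström, which is why tip height can be controlled at sub-atomic resolution.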

An atom can be ionized by removing one of its electrons. The resulting electrical charge causes the trajectory of the ion to be bent when it passes through a magnetic field. The radius of curvature of a moving ion's trajectory in the magnetic field is determined by its mass-to-charge ratio and its speed. The mass spectrometer uses this principle to measure the mass-to-charge ratio of ions. If a sample contains multiple isotopes, the mass spectrometer can determine the proportion of each isotope in the sample by measuring the intensity of the different beams of ions. Techniques to vaporize atoms include inductively coupled plasma atomic emission spectroscopy and inductively coupled plasma mass spectrometry, both of which use a plasma to vaporize samples for analysis.[69]
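For an ion moving perpendicular to a uniform magnetic field, the radius of its path is $r = mv/(|q|B)$, so isotopes of the same element separate by mass. A sketch under hypothetical conditions: singly charged neon-20 and neon-22 ions (standard isotope masses, supplied here) at the same speed of 10⁵ m/s in a 0.5 T field. Real instruments often accelerate ions through a fixed voltage instead, which changes the details but not the mass dependence.

```python
U_TO_KG = 1.66053907e-27             # kilograms per atomic mass unit
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs


def orbit_radius_m(mass_u: float, charge_e: int, speed_m_s: float, field_t: float) -> float:
    """Radius r = m * v / (|q| * B) of an ion's circular path in a uniform field."""
    return mass_u * U_TO_KG * speed_m_s / (abs(charge_e) * ELEMENTARY_CHARGE * field_t)


# Hypothetical operating point: singly charged ions, 1e5 m/s, 0.5 T.
for label, mass_u in [("neon-20", 19.992440), ("neon-22", 21.991385)]:
    r = orbit_radius_m(mass_u, charge_e=1, speed_m_s=1.0e5, field_t=0.5)
    print(f"{label}: r = {r * 100:.2f} cm")
```

At equal speed and charge the radius is directly proportional to mass, so the two isotopes land about 10% apart.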

A more area-selective method is electron energy loss spectroscopy, which measures the energy loss of an electron beam within a transmission electron microscope when it interacts with a portion of a sample. An even more exact method of identification is the atom probe spectroscope, which combines mass spectroscopy and an ion beam to vaporize and independently determine and map constituent atoms.[70] The more bulk-oriented techniques are well suited to measuring atomic constituents throughout a large sample, whereas the atomic-scale methods find use in analyzing interfaces and semiconductor doping species.

Spectra of excited states can be used to analyze the atomic composition of distant stars. Specific light wavelengths contained in the observed light from stars can be separated out and related to the quantized transitions in free gas atoms. These colors can be replicated using a gas discharge lamp containing the same element.[71] Helium was discovered in this way in the spectrum of the Sun 23 years before it was found on Earth.[72]

Applications

Historically, single atoms have been too small for practical scientific applications. Recently, devices have been constructed that use a single metal atom connected through organic ligands to form a single-electron transistor.[73] Experiments have been carried out by trapping and slowing single atoms using laser cooling in a cavity to gain a better physical understanding of matter.[74]

Origin and current state

At present, atoms form about 4% of the total mass density of the observable universe. The average density is about 0.25 atoms/m³.[75] Within a galaxy such as the Milky Way, atoms have a much higher concentration, with the density of matter in the interstellar medium (ISM) ranging from 10⁵ to 10⁹ atoms/m³.[76] The Sun is believed to be inside a Local Bubble of highly ionized gas, so the density in the solar neighborhood is only about 10³ atoms/m³.[77] Stars form from dense clouds in the ISM, and the evolutionary processes of stars result in the steady enrichment of the ISM with more massive elements than hydrogen and helium. Up to 95% of the Milky Way's atoms are concentrated inside stars and the total mass of atoms forms about 10% of the mass of the galaxy.[78]

Atoms are found in different states of matter that depend on the physical conditions, such as temperature and pressure. By varying the conditions, atoms transition between solids, liquids, gases and plasmas. Within a state, the atoms can also exist in different phases. An example of this is solid carbon, which can exist as graphite or diamond. At temperatures close to absolute zero, atoms can form a Bose–Einstein condensate, at which point quantum mechanical effects become apparent on a macroscopic scale.[79][80]

Nucleosynthesis

The first nuclei of elements one through five, including most of the hydrogen, helium, lithium, essentially all of the deuterium and helium-3, and perhaps some of the beryllium and boron in the universe,[81] were theoretically created during big bang nucleosynthesis, about 3 minutes after the big bang.[82][83] The first atoms (complete with bound electrons) were theoretically created 380,000 years after the big bang, in an epoch called recombination, when the expanding universe cooled enough to allow electrons to become attached to nuclei.[84] Since then, atomic nuclei have been combined in stars through the process of nuclear fusion to generate elements up to iron.[85]

Some atoms such as lithium-6 are generated in space through cosmic ray spallation.[86] This occurs when a high energy proton strikes an atomic nucleus, causing large numbers of nucleons to be ejected. Elements heavier than iron were generated in supernovae through the r-process and in AGB stars through the s-process, both of which involve the capture of neutrons by atomic nuclei.[87] Some elements, such as lead, formed largely through the radioactive decay of heavier elements.[88]

Earth

Most of the atoms that make up the Earth and its inhabitants were present in their current form in the nebula that collapsed out of a molecular cloud to form the solar system. The rest are the result of radioactive decay, and their relative proportion can be used to determine the age of the Earth through radiometric dating.[89][90] Most of the helium in the crust of the Earth (about 99% of the helium from gas wells, as shown by its lower abundance of helium-3) is a product of alpha decay.[91]

There are a few trace atoms on Earth that were not present at the beginning (i.e. not "primordial"), nor are results of radioactive decay. Carbon-14 is continuously generated by cosmic rays in the atmosphere.[92] Some atoms on Earth have been artificially generated either deliberately or as by-products of nuclear reactors or explosions.[93][94] Of the transuranic elements—those with atomic numbers greater than 92—only plutonium and neptunium occur naturally on Earth.[95][96] Transuranic elements have radioactive lifetimes shorter than the current age of the Earth[97] and thus identifiable quantities of these elements have long since decayed, with the exception of traces of Pu-244 possibly deposited by cosmic dust.[98] Natural deposits of plutonium and neptunium are generated by neutron capture in uranium ore.[99]

The Earth contains approximately 1.33×10⁵⁰ atoms.[100] In the planet's atmosphere, trace amounts of independent atoms exist for the noble gases, such as argon and neon. The remaining 99% of the atmosphere is bound in the form of molecules, including carbon dioxide and diatomic oxygen and nitrogen. At the surface of the Earth, atoms combine to form various compounds, including water, salt, silicates and oxides. Atoms can also combine to create materials that do not consist of discrete molecules, including crystals and liquid or solid metals.[101][102] This atomic matter forms networked arrangements that lack the particular type of small-scale interrupted order associated with molecular matter, in which small, strongly bound collections of atoms are held to other collections of atoms by much weaker forces.[103]

Most molecules are made up of multiple atoms; for example, a molecule of water is a combination of two hydrogen atoms and one oxygen atom. The term 'molecule' in gases has been used as a synonym for the fundamental particles of the gas, whatever their structure. Under this definition, a few types of gases (for example, inert elements that do not form compounds, such as neon) have 'molecules' consisting of only a single atom.[104]

Rare and theoretical forms

While isotopes with atomic number higher than lead (82) are known to be radioactive, a proposal has been made that an "island of stability" exists for some elements with atomic numbers above 103. These superheavy elements may have a nucleus that is relatively stable against radioactive decay.[105] The most likely candidate for a stable superheavy atom, unbihexium, has 126 protons and 184 neutrons.[106]

Each particle of matter has a corresponding antimatter particle with the opposite electrical charge. Thus the positron is a positively charged anti-electron and the antiproton is a negatively charged equivalent of a proton. For reasons that are not yet clear, antimatter particles are rare in the universe. Hence no naturally occurring antimatter atoms have been discovered.[107][108] Antihydrogen, the antimatter counterpart of hydrogen, was first produced at the CERN laboratory in Geneva in 1996.[109][110]

Other exotic atoms have been created by replacing one of the protons, neutrons or electrons with other particles that have the same charge. For example, an electron can be replaced by a more massive muon, forming a muonic atom. Atoms such as these can be used to test the fundamental predictions of physics.